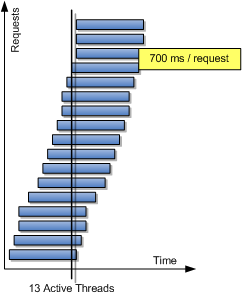

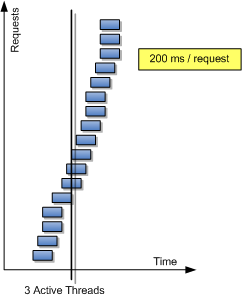

Being fast doesn't make you scalable. But it does mean you can handle more load with your current infrastructure. Take a look at this diagram of request handlers.

You can see that it takes 13 request handling threads to process this amount of load. In the next diagram, the requests arrive at the same rate, but it takes just 200 milliseconds to answer each one.

Same load, but only 3 request handlers are needed at a time. So, shortening the processing time means you can handle more transactions during the same unit of time.
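If you'd rather see the arithmetic than the picture, here is a minimal sketch based on Little's Law (average requests in flight = arrival rate x time per request). The 13 requests per second and the 1 second "slow" response time are my assumptions, chosen so the numbers line up with the diagrams; only the 13 handlers, the 200 milliseconds, and the 3 handlers come from the text above. The function name is made up for illustration.

```python
import math


def handlers_needed(arrival_rate_per_sec: float, response_time_sec: float) -> int:
    """Estimate busy request handlers via Little's Law (rate x time in system)."""
    in_flight = arrival_rate_per_sec * response_time_sec
    # Round away floating-point noise first, then round up: a partly busy
    # handler still occupies a whole thread.
    return math.ceil(round(in_flight, 9))


# Assumed load: 13 requests/second. Assumed slow case: 1 second per request.
slow = handlers_needed(13, 1.0)
# Same assumed arrival rate, but 200 milliseconds per request.
fast = handlers_needed(13, 0.2)
print(slow, fast)
```

This is an average, not a worst case. Bursty traffic will need headroom on top of whatever this estimate gives you.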

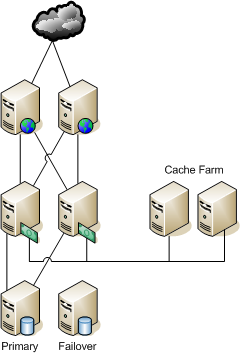

Suppose your site is built on the classic "six-pack" architecture shown below. As your traffic grows and the site slows, you're probably looking at adding more oomph to the database servers. Scaling that database cluster up gets expensive very quickly. Worse, you have to bulk up both guns at once, because each one still has to be able to handle the entire load. So you're paying for big boxes that are guaranteed to be 50% idle.

Let's look at two techniques almost any site can use to speed up requests, without having the Hulk Hogan and Andre the Giant of databases lounging around in your data center.

Cache Farms

Cache farming doesn't mean armies of Chinese gamers stomping rats and making vests. It doesn't involve registering a ton of domain names, either.

Pretty much every web app is already caching a bunch of things at a bunch of layers. Odds are, your application is already caching database results, maybe as objects or maybe just query results. At the top level, you might be caching page fragments. HTTP session objects are nothing but caches. The net result of all this caching is a lot of redundancy. Every app server instance has a bunch of memory devoted to caching. If you're running multiple instances on the same hosts, you could be caching the same object once per instance.

Caching is supposed to speed things up, right? Well, what happens when those app server instances get short on memory? Those caches can tie up a lot of heap space. If they do, then instead of speeding things up, the caches will actually slow responses down as the garbage collector works harder and harder to free up space.

So what do we have? If there are four app instances per host and two app server hosts, then a frequently accessed object---like a product featured on the home page---will be duplicated eight times. Can we do better? Well, since I'm writing this article, you might suspect the answer is "yes". You'd be right.

The caches I've described so far are in-memory, internal caches. That is, they exist completely in RAM and each process uses its own RAM for caching. There exist products, commercial and open-source, that let you externalize that cache. By moving the cache out of the app server process, you can access the same cache from multiple instances, reducing duplication. With those objects out of the heap, you can make the app server heap smaller, which will also reduce garbage collection pauses. If you make the cache distributed, as well as external, then you can reduce duplication even further.

External caching can also be tweaked and tuned to help deal with "hot" objects. If you look at the distribution of accesses by ID, odds are you'll observe a power law. That means the popular items will be requested hundreds or thousands of times as often as the average item. In a large infrastructure, making sure that the hot items are on cache servers topologically near the application servers can make a huge difference in time lost to latency and in load on the network.
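To put a rough number on "hundreds or thousands of times," here is a small sketch that assumes one particular power law: a Zipf-style curve where the item at popularity rank r gets traffic proportional to 1/r. The exponent of 1 and the catalog size of 10,000 items are assumptions for illustration, not measurements from any real site.

```python
def hot_to_average_ratio(num_items: int, exponent: float = 1.0) -> float:
    """How many times more traffic the top item gets than the average item,
    assuming popularity at rank r is proportional to 1 / r**exponent."""
    weights = [1.0 / rank ** exponent for rank in range(1, num_items + 1)]
    total = sum(weights)
    top_share = weights[0] / total    # fraction of requests for the #1 item
    average_share = 1.0 / num_items   # fraction if traffic were spread evenly
    return top_share / average_share


# Hypothetical catalog of 10,000 items.
ratio = hot_to_average_ratio(10_000)
print(round(ratio))
```

Under those assumptions the hottest item draws on the order of a thousand times the average item's traffic, which is why where that one item lives matters so much.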

External caches are subject to the same kind of invalidation strategies as internal caches. On the other hand, when you invalidate an item from each app server's internal cache, they're probably all going to hit the database at about the same time. With an external cache, only the first app server hits the database. The rest will find that it's already been re-added to the cache.
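Here is a toy comparison of the two cases. Plain Python dicts stand in for both the per-instance caches and the external cache, and every class and function name is invented for this sketch. It processes requests one at a time, so it ignores the race where several app servers miss at the same instant.

```python
class Database:
    """Stand-in for the real database; counts how often it gets read."""

    def __init__(self):
        self.rows = {"sku-1": "widget"}
        self.reads = 0

    def load(self, key):
        self.reads += 1
        return self.rows[key]


class AppServer:
    """One app server instance doing cache-aside lookups."""

    def __init__(self, db, cache):
        self.db = db
        self.cache = cache  # private dict (internal) or shared dict (external)

    def get(self, key):
        if key not in self.cache:
            self.cache[key] = self.db.load(key)  # miss: go to the database
        return self.cache[key]


def db_reads_after_invalidation(shared: bool, instances: int = 8) -> int:
    """Warm the caches, invalidate one item, then count database reads."""
    db = Database()
    external = {}
    servers = [AppServer(db, external if shared else {}) for _ in range(instances)]
    for server in servers:
        server.get("sku-1")             # warm every cache
    for server in servers:
        server.cache.pop("sku-1", None)  # invalidate the item everywhere
    db.reads = 0
    for server in servers:
        server.get("sku-1")             # next request on each instance
    return db.reads


internal_reads = db_reads_after_invalidation(shared=False)
external_reads = db_reads_after_invalidation(shared=True)
print(internal_reads, external_reads)
```

With eight instances, the internal caches cost eight database reads after the invalidation, while the shared cache costs one.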

External cache servers can run on the same hosts as the app servers, but they are often clustered together on hosts of their own. Hence, the cache farm.

If the external cache doesn't have the item, the app server hits the database as usual. So I'll turn my attention to the database tier.

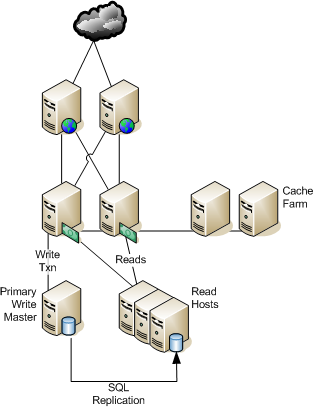

Read Pools

The toughest thing for any database to deal with is a mixture of read and write operations. The write operations have to create locks and, if transactional, locks across multiple tables or blocks. If the same tables are being read, those reads will have highly variable performance, depending on whether a read operation randomly encounters one of the locked rows (or pages, blocks, or tables, depending).

But the truth is that your application almost certainly does more reads than writes, probably to an overwhelming degree. (Yes, there are some domains where writes exceed reads, but I'm going to momentarily disregard mindless data collection.) For a travel site, the ratio will be about 10:1. For a commerce site, it will be from 50:1 to 200:1. There are a lot of variables here, especially when you start doing more effective caching, but even then, the ratios are highly skewed.

When your database starts to get that middle-age paunch and it just isn't as zippy as it used to be, think about offloading those reads. At a minimum, you'll be able to scale out instead of up. Scaling out with smaller, consistent, commodity hardware pleases everyone more than forklift upgrades. In fact, you'll probably get more performance out of your writes once all that pesky read I/O is off the write master.
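One way an application might offload reads is to decide at the data-access layer which database each statement goes to. The sketch below is a simplification under my own assumptions: the class name is hypothetical, the "connections" are just labels, and it treats any statement that starts with SELECT as a read, which real code would need to refine.

```python
import itertools


class ReadWriteRouter:
    """Send reads to the read pool (round-robin) and everything else to the master."""

    def __init__(self, write_master, read_pool):
        if not read_pool:
            raise ValueError("read_pool must contain at least one database")
        self.write_master = write_master
        self._next_reader = itertools.cycle(read_pool)

    def connection_for(self, sql: str):
        words = sql.split()
        verb = words[0].upper() if words else ""
        if verb == "SELECT":
            return next(self._next_reader)  # spread reads across the pool
        return self.write_master            # INSERT, UPDATE, DELETE, DDL, ...


# Strings stand in for real connection objects.
router = ReadWriteRouter("master", ["reader-1", "reader-2"])
targets = [
    router.connection_for("SELECT name FROM product WHERE id = 1"),
    router.connection_for("UPDATE product SET price = 899 WHERE id = 1"),
    router.connection_for("select price from product where id = 1"),
]
print(targets)
```

A read that has to see a write the same user just made is the usual exception. That kind of read probably still belongs on the write master.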

How do you create a read pool? Good news! It uses nothing more than built-in replication features of the database itself. Basically, you just configure the write master to ship its archive logs (or whatever your DB calls them) to the read pool databases. They replay the logs to bring their state into sync with the write master.
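As a mental model only, log shipping can be sketched in a few lines. This is a toy: real databases do this with their own log formats and replication machinery, and the class names, keys, and prices here are invented for illustration.

```python
class WriteMaster:
    """Applies writes and records each one in an ordered change log."""

    def __init__(self):
        self.data = {}
        self.log = []

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))


class ReadReplica:
    """Catches up by replaying whatever log records it has not applied yet."""

    def __init__(self):
        self.data = {}
        self.applied = 0  # count of log records replayed so far

    def apply_shipped_log(self, log):
        for key, value in log[self.applied:]:
            self.data[key] = value
        self.applied = len(log)


master = WriteMaster()
replica = ReadReplica()

master.write("price:sku-1", 999)
replica.apply_shipped_log(master.log)        # first shipment arrives

master.write("price:sku-1", 899)             # master moves ahead
stale_price = replica.data["price:sku-1"]    # replica has not seen this yet

replica.apply_shipped_log(master.log)        # next shipment arrives
fresh_price = replica.data["price:sku-1"]
print(stale_price, fresh_price)
```

Between shipments, the replica answers reads from the last state it replayed.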

By the way, for read pooling, you really want to avoid database clustering approaches. The overhead needed for synchronization obviates the benefits of read pooling in the first place.

At this point, you might be objecting, "Wait a cotton-picking minute! That means the read machines are garun-damn-teed to be out of date!" (That's the Foghorn Leghorn version of the objection. I'll let you extrapolate the Tony Soprano and Geico Gecko versions yourself.) You would be correct. The read machines will always reflect an earlier point in time.

Does that matter?

To a certain extent, I can't answer that. It might matter, depending on your domain and application. But in general, I think it matters less often than it seems. I'll give you an example from the retail domain that I know and love so well. Take a look at this product detail page from BestBuy.com. How often do you think each data field on that page changes? Suppose there is a pricing error that needs to be corrected immediately (for some definition of immediately). What's the total latency before that pricing error will be corrected? Let's look at the end-to-end process.

- A human detects the pricing error.

- The observer notifies the responsible merchant.

- The merchant verifies that the price is in error and determines the correct price.

- Because this is an emergency, the merchant logs in to the "fast path" system that bypasses the nightly batch cycle.

- The merchant locates the item and enters the correct price.

- She hits the "publish" button.

- The fast path system connects to the write master in production and updates the price.

- The read pool receives the logs with the update and applies them.

- The read pool process sends a message to invalidate the item in the app servers' caches.

- The next time users request that product detail page, they see the correct price.

That's the best-case scenario! In the real world, the merchant will be in a meeting when the pricing error is found. It may take a phone call or lookup from another database to find out the correct price. There might be a quick conference call to make the decision whether to update the price or just yank the item off the site. All in all, it might take an hour or two before the pricing error gets corrected. Whatever the exact sequence of events, odds are that the replication latency from the write master to the read pool is the very least of the delays.

Most of the data is much less volatile or critical than the price. Is an extra five minutes of latency really a big deal? When it can save you a couple of hundred thousand dollars on giant database hardware?

Summing It Up

The reflexive answer to scaling is, "Scale out at the web and app tiers, scale up in the data tier." I hope this shows that there are other avenues to improving performance and capacity.

References

For more on read pooling, see Cal Henderson's excellent book, "Building Scalable Web Sites: Building, scaling, and optimizing the next generation of web applications".

The most popular open-source external caching framework I've seen is memcached. It's a flexible, multi-lingual caching daemon.

On the commercial side, GigaSpaces provides distributed, external, clustered caching. It adapts to the "hot item" problem dynamically to keep a good distribution of traffic, and it can be configured to move cached items closer to the servers that use them, reducing network hops to the cache.